Building a Two-Tower Search Query Retrieval Model: A Practical Guide

Two-Tower models provide a powerful, scalable approach to retrieval tasks in recommendation systems.

Recommendation and search systems shape the user experience on many platforms: e-commerce websites suggesting products, social media platforms recommending content, and search engines retrieving relevant information. Behind them there is usually a retrieval model that narrows a large catalog down to a small set of candidates. One widely used architecture for this step is the Two-Tower model, which has become popular for its scalability and effectiveness.

What Are Two-Tower Models?

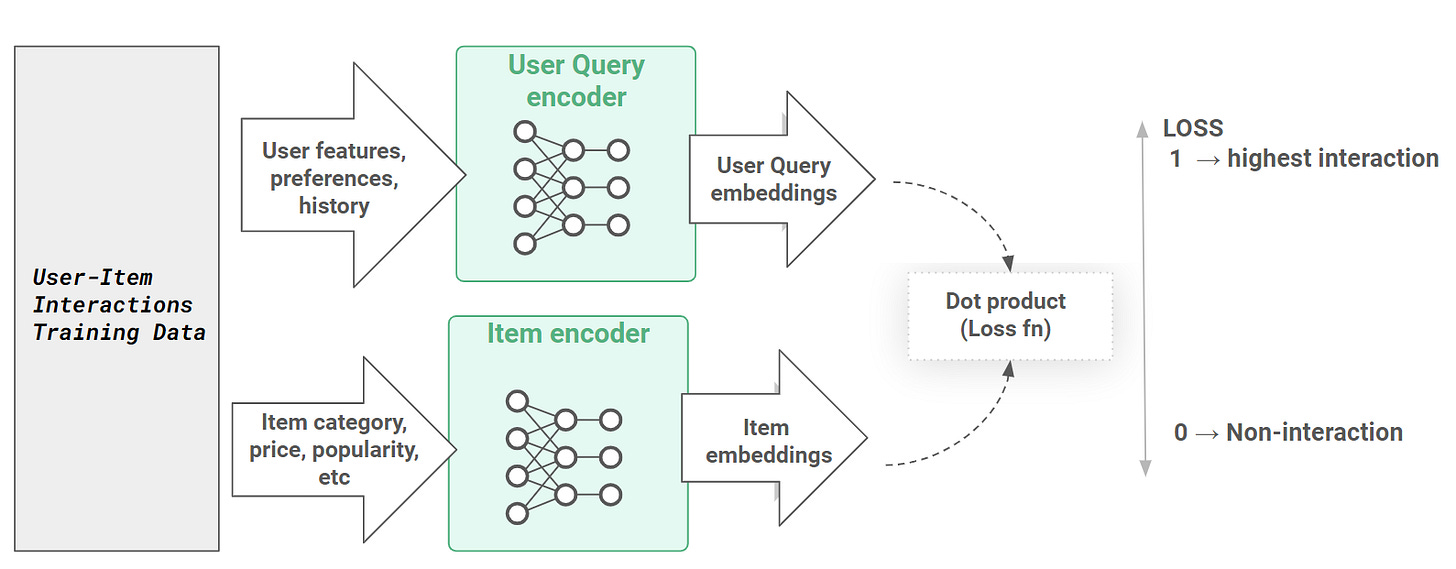

Two-Tower models are deep learning architectures specifically designed for retrieval tasks in recommendation systems. As the name suggests, they consist of two separate neural network "towers":

Query Tower: Processes user queries or user information

Item Tower: Processes item information (products, documents, videos, etc.)

Each tower independently transforms its inputs into embeddings (dense vector representations), which are then compared using similarity metrics to determine relevance.
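As a minimal illustration of that comparison step, the sketch below scores one query embedding against two item embeddings with cosine similarity. The three-dimensional vectors are made up for readability; real towers emit learned vectors with many more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy embeddings (hypothetical values, not produced by a trained model).
query_embedding = [0.9, 0.1, 0.0]
running_shoe = [0.8, 0.2, 0.1]
coffee_maker = [0.0, 0.1, 0.9]

print(cosine_similarity(query_embedding, running_shoe))  # close to 1: similar direction
print(cosine_similarity(query_embedding, coffee_maker))  # close to 0: nearly orthogonal
```

A higher score means the two vectors point in a more similar direction, which the model is trained to associate with relevance.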

Versatility of Two-Tower Models

The beauty of Two-Tower models lies in their versatility. The two towers can represent various entity pairs:

Query-Product: In e-commerce (search for shoes, get relevant footwear)

Query-Document: In knowledge bases (search Wikipedia, get relevant articles)

Query-Person: In professional networks (search LinkedIn, find relevant profiles)

User-Product: For personalized product recommendations

User-Content: For personalized content feeds (Instagram, YouTube)

User-User: For friend/connection recommendations (LinkedIn, Twitter)

Why Companies Use Two-Tower Models at Scale

Efficiency and Scalability

Two-Tower models offer significant advantages for large-scale applications:

Offline Computation: Item embeddings can be pre-computed and stored, reducing real-time computation needs.

Fast Retrieval: When a user submits a query, only the query embedding needs to be computed in real time.

Approximate Nearest Neighbor (ANN) Search: Fast similarity search techniques can quickly find the most relevant items from millions of options.
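The split between offline and online work described above can be sketched as follows. The item vectors are toy values standing in for the item tower's output, and the exhaustive scan stands in for an ANN index, so treat this as an illustration of the data flow rather than a production setup.

```python
import heapq

# Offline: item embeddings are computed once by the item tower and stored.
# Toy, already L2-normalized vectors (hypothetical values).
ITEM_INDEX = {
    "running_shoe": [0.8, 0.6, 0.0],
    "trail_shoe": [0.6, 0.8, 0.0],
    "coffee_maker": [0.0, 0.0, 1.0],
}

def top_k(query_embedding, item_index, k=2):
    """Return the k item ids with the highest dot-product score.

    This scans every item; an ANN library would replace the scan
    with an index lookup that trades a little accuracy for speed.
    """
    scored = (
        (sum(q * v for q, v in zip(query_embedding, vec)), item_id)
        for item_id, vec in item_index.items()
    )
    return [item_id for _, item_id in heapq.nlargest(k, scored)]

# Online: only the query embedding is computed at request time.
query_embedding = [1.0, 0.0, 0.0]  # stand-in for the query tower's output
print(top_k(query_embedding, ITEM_INDEX))  # ['running_shoe', 'trail_shoe']
```

Because the item side never changes at request time, the cost of a query is one tower forward pass plus the index lookup.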

Generalization Capability

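Because each tower computes embeddings from content features such as text rather than looking up a memorized ID, the model can generate meaningful embeddings even for items or queries not seen during training, which lets the system handle new items gracefully.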

Implementing a Two-Tower Model: A Practical Approach

Let's walk through the implementation of a basic Two-Tower model using the Amazon search query dataset.

The Dataset

We'll use the Amazon search query dataset, which contains:

Queries (what users searched for)

Products (items in the catalog)

Relevance labels (exact match, substitute, complement, irrelevant)

Product features (title, description, bullet points, brand, color)

Model Architecture

Our Two-Tower model will consist of:

Query Tower:

Takes query text as input

Converts it to embeddings using a pre-trained model

Passes through dense layers to create a condensed representation

Product Tower:

Takes product features as input (title, description, bullet points, brand, color)

Converts each feature to embeddings

Concatenates these embeddings

Passes through dense layers to create a condensed representation

Similarity Computation:

L2 normalization of both tower outputs

Dot product computation (equivalent to cosine similarity after normalization)

Binary cross-entropy loss for training
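The similarity and loss steps can be written out in a few lines. One detail worth noting: the dot product of normalized vectors lies in [-1, 1], while binary cross-entropy expects a probability. The sketch below assumes a scaled sigmoid bridges the two; the `scale` parameter is a hypothetical hyperparameter introduced for illustration, not something taken from the original implementation.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def similarity(query_vec, product_vec):
    """Dot product of L2-normalized vectors, i.e. cosine similarity."""
    q = l2_normalize(query_vec)
    p = l2_normalize(product_vec)
    return sum(a * b for a, b in zip(q, p))

def binary_cross_entropy(label, similarity_score, scale=5.0):
    """BCE for one (query, product) pair.

    Assumption: the score in [-1, 1] is mapped to a probability with
    a scaled sigmoid. `scale` is a hypothetical hyperparameter.
    """
    prob = 1.0 / (1.0 + math.exp(-scale * similarity_score))
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

score = similarity([3.0, 4.0], [6.0, 8.0])  # same direction, different length
print(score)                            # approximately 1.0
print(binary_cross_entropy(1, score))   # small loss: positive pair, high score
print(binary_cross_entropy(0, score))   # large loss: negative pair, high score
```

Normalizing first means vector length cannot inflate the score, so the loss only rewards the towers for agreeing on direction.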

Implementation Steps

Data Preparation:

Convert all text to lowercase

Handle missing values

Generate embeddings for queries and product features using a pre-trained Sentence Transformer model
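The text-cleaning part of these steps might look like the sketch below. The field names are assumptions based on the product features listed earlier, so the actual dataset columns may be named differently. The embedding step itself is left out because it needs a downloaded pre-trained model.

```python
import math

def clean_text(value):
    """Lowercase a text field; treat None, NaN, or blank values as empty."""
    if value is None:
        return ""
    if isinstance(value, float) and math.isnan(value):
        return ""
    return str(value).strip().lower()

# Hypothetical field names, following the product features listed above.
PRODUCT_FIELDS = ["title", "description", "bullet_points", "brand", "color"]

def prepare_product(record):
    """Return a dict with every expected field present and cleaned."""
    return {field: clean_text(record.get(field)) for field in PRODUCT_FIELDS}

raw = {"title": "Nike Air Zoom", "brand": "NIKE", "color": None}
print(prepare_product(raw))
# {'title': 'nike air zoom', 'description': '', 'bullet_points': '', 'brand': 'nike', 'color': ''}
```

Filling missing fields with an empty string keeps every product the same shape, so each field can be encoded and concatenated in a fixed order.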

Model Definition:

Define input layers for query and product features

Create dense layers to condense the embeddings (from 768 to 16 dimensions)

Add L2 normalization layers

Compute dot product for similarity
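To make the model definition concrete, here is a forward pass written in plain Python so the arithmetic stays visible. A real implementation would use a deep learning framework and learn the weights. The hidden size of 64 and the random inputs are assumptions, and the product input is a single 768-dimensional vector for brevity, whereas concatenating several feature embeddings would make it wider.

```python
import math
import random

def dense(inputs, weights, biases, relu=True):
    """Fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    outputs = []
    for j in range(len(biases)):
        total = biases[j] + sum(x * row[j] for x, row in zip(inputs, weights))
        outputs.append(max(0.0, total) if relu else total)
    return outputs

def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero
    return [x / norm for x in vec]

def init_layer(rng, n_in, n_out):
    """Random weights for illustration; training would learn these."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out

def tower(embedding, layers):
    """Apply dense layers (ReLU on all but the last), then L2-normalize."""
    hidden = embedding
    for i, (weights, biases) in enumerate(layers):
        hidden = dense(hidden, weights, biases, relu=(i < len(layers) - 1))
    return l2_normalize(hidden)

rng = random.Random(0)
# 768 -> 64 -> 16; the hidden size of 64 is an assumption.
query_layers = [init_layer(rng, 768, 64), init_layer(rng, 64, 16)]
product_layers = [init_layer(rng, 768, 64), init_layer(rng, 64, 16)]

query_input = [rng.gauss(0, 1) for _ in range(768)]    # stand-in for a query text embedding
product_input = [rng.gauss(0, 1) for _ in range(768)]  # stand-in for product features

q = tower(query_input, query_layers)
p = tower(product_input, product_layers)
score = sum(a * b for a, b in zip(q, p))  # cosine similarity of the two outputs
print(len(q), len(p), score)
```

The two towers share a structure but not weights, which is what lets product embeddings be computed without knowing the query.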

Training:

Use binary cross-entropy loss

Treat exact matches as positive examples (label 1)

Treat irrelevant matches as negative examples (label 0)

Implement model checkpointing to save the best model
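The labeling rule above can be sketched as a small filter. The label strings and the choice to skip substitute and complement pairs are assumptions: the text only says how exact and irrelevant pairs are labeled, and the dataset may encode its labels differently.

```python
# Hypothetical label strings; only the two classes used for training are mapped.
LABEL_TO_TARGET = {"exact": 1, "irrelevant": 0}

def build_training_pairs(rows):
    """Keep exact and irrelevant pairs; skip any other label (assumption)."""
    pairs = []
    for query, product_id, label in rows:
        target = LABEL_TO_TARGET.get(label.strip().lower())
        if target is not None:
            pairs.append((query, product_id, target))
    return pairs

rows = [
    ("nike running shoes", "P1", "Exact"),
    ("nike running shoes", "P2", "Substitute"),
    ("nike running shoes", "P3", "Irrelevant"),
]
print(build_training_pairs(rows))
# [('nike running shoes', 'P1', 1), ('nike running shoes', 'P3', 0)]
```

Using only the two extreme classes gives the binary loss a clean signal, at the cost of discarding the in-between examples.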

Inference:

Extract the normalized query and product embeddings

Perform approximate nearest neighbor search to find relevant items for new queries
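The text does not say which ANN method is used, so the sketch below shows one well-known idea, random-hyperplane locality-sensitive hashing, purely as an illustration. Items are hashed into buckets by which side of several random hyperplanes they fall on, and a query is scored only against items in its own bucket. All names and toy vectors are hypothetical, and a production system would more likely rely on a dedicated ANN library.

```python
import random

def make_hyperplanes(rng, num_planes, dim):
    """Random hyperplanes through the origin, one per hash bit."""
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)]

def hash_vector(vec, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        1 if sum(v * h for v, h in zip(vec, plane)) >= 0 else 0
        for plane in hyperplanes
    )

def build_index(item_embeddings, hyperplanes):
    """Group item ids into buckets keyed by their hash."""
    buckets = {}
    for item_id, vec in item_embeddings.items():
        buckets.setdefault(hash_vector(vec, hyperplanes), []).append(item_id)
    return buckets

def search(query_vec, item_embeddings, buckets, hyperplanes, k=2):
    """Score only the items that share the query's bucket."""
    candidates = buckets.get(hash_vector(query_vec, hyperplanes), [])
    ranked = sorted(
        candidates,
        key=lambda item_id: sum(q * v for q, v in zip(query_vec, item_embeddings[item_id])),
        reverse=True,
    )
    return ranked[:k]

# Toy normalized product embeddings (hypothetical values).
items = {
    "running_shoe": [0.8, 0.6, 0.0],
    "trail_shoe": [0.6, 0.8, 0.0],
    "coffee_maker": [0.0, 0.0, 1.0],
}
planes = make_hyperplanes(random.Random(42), num_planes=4, dim=3)
buckets = build_index(items, planes)

# A query identical to an item lands in that item's bucket and ranks it first.
print(search(items["running_shoe"], items, buckets, planes))
```

The approximation comes from the bucketing: a relevant item that hashes to a neighboring bucket is missed, which is why practical systems use several hash tables or other index structures.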

Testing the Model

After training, we can test our model with new queries like:

"Red dress for wedding"

"Best laptop"

"Nike running shoes"

"iPhone 13"

The model successfully retrieves relevant products, such as:

Wedding dresses and accessories for "Red dress for wedding"

Nike women's running shoes for "Nike running shoes"

Wireless chargers for "charger for smartphone"

Potential Improvements

While our basic implementation retrieves sensible results for the test queries above, several enhancements could improve performance:

Additional Features:

Include numerical features like price, ratings, and reviews

Add query-specific features like query length and popularity
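One simple way to add numerical features is to scale them and append them to the text embedding before the dense layers. The sketch below assumes price and review counts are non-negative and heavy-tailed, and that ratings use a 0 to 5 scale; the function names and values are hypothetical.

```python
import math

def numeric_features(price, rating, review_count):
    """Scale raw numbers into comparable ranges before concatenation.

    Assumptions: price and review counts are non-negative and
    heavy-tailed, so log1p compresses them; ratings are on a 0-5 scale.
    """
    return [math.log1p(price), rating / 5.0, math.log1p(review_count)]

def product_tower_input(text_embedding, price, rating, review_count):
    """Append scaled numeric features to the text embedding."""
    return list(text_embedding) + numeric_features(price, rating, review_count)

text_embedding = [0.1, -0.2, 0.3]  # stand-in for a sentence embedding
features = product_tower_input(text_embedding, price=59.99, rating=4.5, review_count=1200)
print(len(features))  # 6: three text dimensions plus three numeric features
```

Scaling matters because an unscaled review count in the thousands would otherwise dominate embedding values that sit near zero.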

Larger Training Dataset:

Training on more examples would likely improve embedding quality

Advanced Techniques:

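Implement an adaptive mimic mechanism, in which each tower learns an extra vector that mimics the other tower's output for its positive pairs

Add a category alignment loss, so that categories with little training data benefit from what is learned on data-rich ones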

Explore personalization options based on user behavior

Conclusion

Two-Tower models provide a powerful, scalable approach to retrieval tasks in recommendation systems. By learning meaningful embeddings for both queries and items, these models can efficiently retrieve relevant results from massive catalogs in real time.

The implementation we've explored demonstrates how to build a basic Two-Tower model from scratch, but there's significant room for customization and improvement based on specific use cases and requirements.

Whether you're building a search system, a recommendation engine, or any application requiring efficient retrieval from large datasets, the Two-Tower architecture offers a solid foundation to build upon.

Happy modeling!

Have you implemented recommendation systems before? What challenges did you face? Share your experiences in the comments below!